Rossum R&D: Lessons Learned from Scaling LLM Translations

If you’re working with enterprise software, you know the challenge all too well: your front-end app’s translation keys keep multiplying, and the number of supported languages grows steadily. Initially, we relied on a traditional translation service, but that approach came with delays, high costs, and a time-consuming review process. We had to communicate with the account manager, plan the translation of new keys in advance, answer translators’ questions, and so on. English entries always stayed fresh, but other languages often lagged behind, leading to inconsistencies. We knew there had to be a better way. So, during a hackathon, we decided to rethink our approach and explore the potential of large language models (LLMs) to streamline the translation process.

From concept to implementation

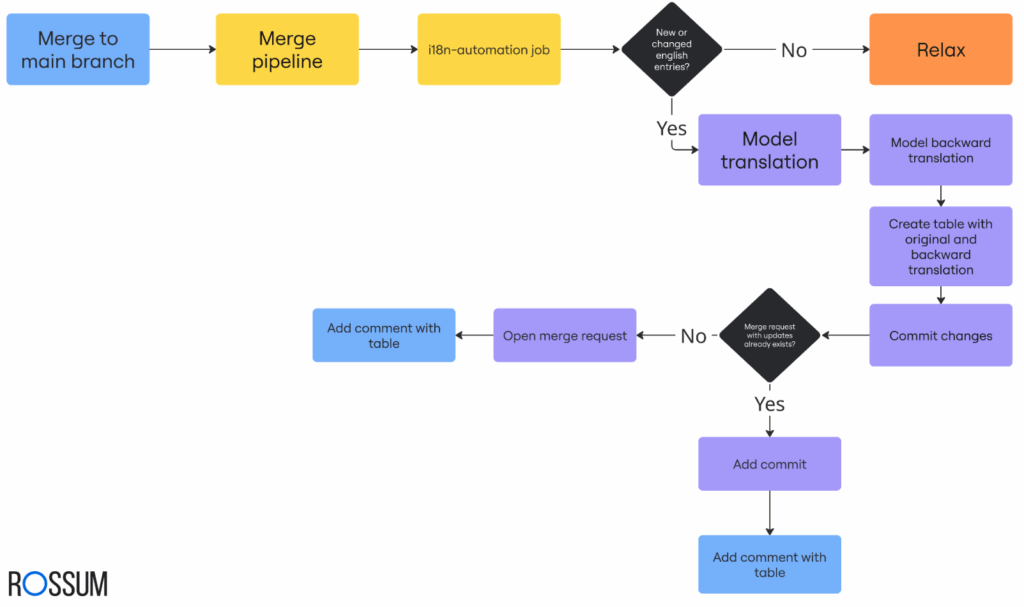

Our vision was to create a fully automated pipeline that would handle everything, from detecting changes in the English translations to opening merge requests for review (we store the translation key-value pairs in the codebase, so every update goes through a merge request). The diagram we created during the hackathon served as the blueprint for this system. Here’s how it worked in practice:

- When new or updated English strings were detected (by comparing the latest tagged commit on the main branch with the current commit), the pipeline triggered an automation job.

- This job compared the latest English entries with the previous version and identified all new and modified keys.

- We chose a large language model (Claude 3.5 Sonnet) because it handles contextual meaning well, even when terms have specialized meanings within our internal system. It also has a large context window, so we could provide previously human-translated keys as context to keep the translations consistent and accurate. Although this model is costly, we found the quality well worth the expense (more about model choice in the next section).

- To ensure accuracy, the system performed a reverse translation: it translated the new entries back to English and compared them with the originals. We did this because the reviewer was expected to be a developer approving the merge request, and since we support languages with non-Latin scripts (like Japanese and Chinese), no single person could check every language version.
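The change-detection step from the list above can be sketched in a few lines of Python. This is a minimal sketch rather than our production code: it assumes the English strings live in a (possibly nested) JSON object that has already been loaded for both commits, and the function names `flatten` and `diff_english_keys` are hypothetical. Deleted keys are deliberately ignored, since they need no translation.

```python
from typing import Any, Dict, Tuple


def flatten(tree: Dict[str, Any], prefix: str = "") -> Dict[str, str]:
    """Turn nested translation JSON into dotted keys, e.g. {"a": {"b": "x"}} -> {"a.b": "x"}."""
    flat: Dict[str, str] = {}
    for key, value in tree.items():
        path = f"{prefix}.{key}" if prefix else key
        if isinstance(value, dict):
            flat.update(flatten(value, path))
        else:
            flat[path] = value
    return flat


def diff_english_keys(
    previous: Dict[str, Any], current: Dict[str, Any]
) -> Tuple[Dict[str, str], Dict[str, str]]:
    """Return (new_keys, modified_keys) between two versions of the English file."""
    old, new = flatten(previous), flatten(current)
    added = {k: v for k, v in new.items() if k not in old}
    modified = {k: v for k, v in new.items() if k in old and old[k] != v}
    return added, modified


if __name__ == "__main__":
    # Hypothetical English files at the last tagged commit and at the current commit.
    previous = {"queue": {"title": "Queues"}, "upload": {"button": "Upload"}}
    current = {
        "queue": {"title": "Document queues"},
        "upload": {"button": "Upload"},
        "export": {"title": "Export"},
    }
    added, modified = diff_english_keys(previous, current)
    print(added)     # {'export.title': 'Export'}
    print(modified)  # {'queue.title': 'Document queues'}
```

Only the union of `added` and `modified` would then be sent to the model, which keeps each run (and its cost) proportional to the actual change.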

We decided to run the automation job as often as possible to keep updates small and easy to review.

By comparing the back-translated English to the original, we could easily spot potential inaccuracies or awkward phrasings. Reviewers no longer needed to be fluent in every supported language – they simply needed to verify that the meaning was preserved. This step not only improved quality but also built trust in the system.

A merge request note was generated, displaying the original English text, the LLM-generated translation, and the back-translated English for each key where discrepancies were found. This table format highlighted possible errors, making it easy for reviewers – regardless of language proficiency – to spot potential issues.
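How exactly a “discrepancy” is detected is a design choice, and the sketch below assumes a simple one: a key is flagged when the back-translated English is less than 80% similar to the original according to Python’s `difflib.SequenceMatcher`, and only flagged keys are rendered as a Markdown table for the merge request note. The threshold, the `Row` type, and `render_note` are hypothetical.

```python
from difflib import SequenceMatcher
from typing import List, NamedTuple


class Row(NamedTuple):
    key: str
    original: str          # English source string
    translation: str       # LLM translation into the target language
    back_translation: str  # the translation, translated back into English


def similarity(a: str, b: str) -> float:
    # Case-insensitive character-level similarity in the range [0, 1].
    return SequenceMatcher(None, a.lower(), b.lower()).ratio()


def render_note(rows: List[Row], threshold: float = 0.8) -> str:
    """Render a Markdown table of the keys whose back-translation drifts from the original."""
    flagged = [r for r in rows if similarity(r.original, r.back_translation) < threshold]
    if not flagged:
        return "No discrepancies found."
    lines = [
        "| Key | Original (EN) | Translation | Back-translation (EN) |",
        "| --- | --- | --- | --- |",
    ]
    for r in flagged:
        # Escape pipes so user-facing strings don't break the table layout.
        cells = [c.replace("|", "\\|") for c in r]
        lines.append("| " + " | ".join(cells) + " |")
    return "\n".join(lines)


if __name__ == "__main__":
    rows = [
        Row("upload.button", "Upload", "Hochladen", "Upload"),
        Row("engine.label", "AI engine", "KI-Motor", "AI motor"),
    ]
    print(render_note(rows))  # only engine.label is listed
```

Character similarity is cheap but naive: a harmless rephrasing can fall below the threshold, so an LLM-based or embedding-based comparison may produce fewer false alarms.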

The system then summarized the changes, created a concise commit message, and automatically opened a merge request with the updated translation files and the comparison table attached.

Automated LLM-driven translation process.

This approach gave us a clear, repeatable process that improved both speed and accuracy while dramatically reducing manual effort.

Why we landed on Claude 3.5 Sonnet

Choosing an LLM is a moving target, so we benchmarked in May 2025, before our production cut-over. At that moment, Claude 3.5 Sonnet won on the criteria that mattered to us:

- External quality leader. A Lokalise study from February 2025 compared five engines using human raters; Sonnet took first place across all eight language pairs tested.

- Massive context window. It offers a 200k-token context window and an 8k-token (8,192) output limit per request. That’s enough to prepend our glossary and the entire previous release’s keys and still fit each batch into a single request.

- Price still beats people. At $3 / M input tokens and $15 / M output tokens, a typical 600-word feature branch costs ≈$0.018 – a fraction of average enterprise human rates.

- Placeholder fidelity. In our first weeks of observation, Sonnet preserved every ICU placeholder and Markdown tag perfectly.

- Glossary obedience. When instructed not to translate specific terms like “AI engine,” Sonnet sticks to it; smaller open models often drifted to “AI motor.”
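The ≈$0.018 figure is straightforward arithmetic. The sketch below assumes that a 600-word branch comes to roughly 1,000 input tokens and about 1,000 output tokens for one target language; those token counts are our illustration, not measured values. The second call, with a hypothetical 50,000-token prepended context, shows why the context-window bullet matters for the bill: every glossary or previous-keys token is billed as input on every request.

```python
# Claude 3.5 Sonnet prices quoted above, in USD per million tokens.
INPUT_USD_PER_MTOK = 3.0
OUTPUT_USD_PER_MTOK = 15.0


def request_cost(input_tokens: int, output_tokens: int) -> float:
    """USD cost of a single request at the per-million-token prices above."""
    return (input_tokens * INPUT_USD_PER_MTOK + output_tokens * OUTPUT_USD_PER_MTOK) / 1_000_000


if __name__ == "__main__":
    # Assumption: ~600 English words ≈ 1,000 input tokens and ~1,000 output tokens per language.
    print(f"${request_cost(1_000, 1_000):.3f}")   # $0.018
    # Hypothetical: the same branch with a 50,000-token glossary and previous-keys context prepended.
    print(f"${request_cost(51_000, 1_000):.3f}")  # $0.168
```

Multiply by the number of target languages to get the cost of one run.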

To provide some context, here is a simplified look at the competitive landscape as of early 2025.

| Model | Organization | Context window (input tokens) | Key strength |
| --- | --- | --- | --- |
| Claude 3.5 Sonnet | Anthropic | 200k | #1 in Lokalise MT study; strong glossary control |
| GPT-4o | OpenAI | 128k | Tops many translation benchmarks; best multimodal |
| Gemini 1.5 Pro | Google | 2M | Industry-leading context window, low latency at scale |
| Qwen 2.5-Max | Alibaba | 128k | Claims to surpass GPT-4o on MT tasks |
| NLLB-200 | Meta | ~1k | Best coverage for low-resource languages (200 languages) |

As of today (August 2025), we would probably go with Claude Sonnet 4.

From hackathon to production-ready service

It took us a while to clean up the code, make it readable, handle errors properly, and make sure model usage was tracked effectively. We also focused on thorough documentation so that anyone brave enough to dive in could understand it without a PhD in cryptography (which, after some time, turned out to be wishful thinking 😅). After what felt like a million tweaks and adjustments, the service finally looked polished enough to launch. So, we decided to flip the switch and go live – like any ambitious team that thinks they can automate the world in a weekend.


The surprise in the bill

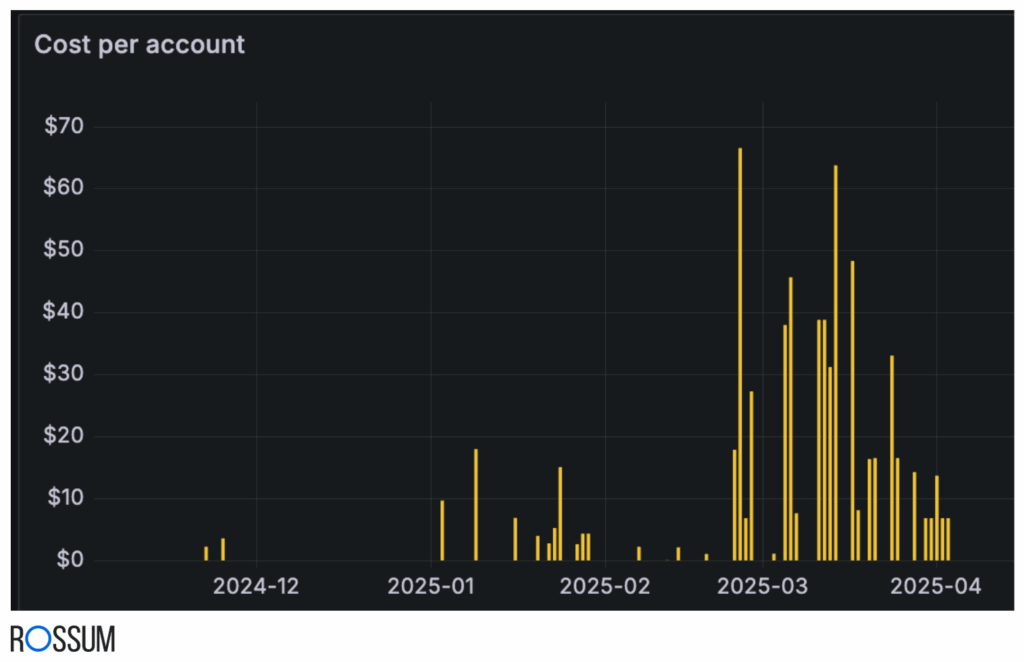

After the service ran in production for about four weeks, we were patting ourselves on the back. Translations were flowing, reviews were a breeze, and our initial budget projections looked solid. We were so confident that we’d already canceled our legacy human translation service. We were living the automation dream.

That dream came to a screeching halt when someone from finance gently inquired why our LLM usage was costing more than the service we had just fired. Suddenly, we were racking up hundreds of dollars a month. It turned out our “set it and forget it” service required, well, not forgetting it.

Can you guess when we launched the service to production?

The culprit was painfully obvious in hindsight. After an initial month of diligent monitoring, everyone… stopped caring 😏. New merge requests from the service would appear, then sit open for weeks like an abandoned snack in the office fridge. Because our pipeline used the last merged commit as its starting point, every new run re-translated not just the latest changes but all previously unmerged changes too. With our reverse-translation feature doubling the token count of every run, each job was bigger than the last, and the costs snowballed.
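The snowball effect is easy to model. The sketch below assumes that every run adds the same amount of new English text (1,000 tokens, a made-up number) and that reverse translation simply doubles the tokens per run. When merge requests are merged promptly, each run translates only its own batch; when they sit open, run k re-translates all k pending batches, so the per-run size grows linearly and the cumulative total grows roughly quadratically. The function names are hypothetical.

```python
def tokens_for_run(unmerged_batches: int, tokens_per_batch: int, reverse_translation: bool) -> int:
    """Tokens processed by one run that re-translates every unmerged batch."""
    # The diff starts at the last merged commit, so all unmerged batches are sent again.
    tokens = unmerged_batches * tokens_per_batch
    # Reverse translation pushes the output through the model a second time.
    return 2 * tokens if reverse_translation else tokens


def total_tokens(runs: int, tokens_per_batch: int, reverse_translation: bool, merged_each_run: bool) -> int:
    """Total tokens across `runs` runs, each adding one new batch of English changes."""
    total = 0
    for run in range(1, runs + 1):
        # Merged promptly: only the newest batch is pending. Otherwise all `run` batches are.
        unmerged = 1 if merged_each_run else run
        total += tokens_for_run(unmerged, tokens_per_batch, reverse_translation)
    return total


if __name__ == "__main__":
    # Hypothetical numbers: 10 runs, 1,000 tokens of new English text per run.
    print(total_tokens(10, 1_000, reverse_translation=True, merged_each_run=True))   # 20000
    print(total_tokens(10, 1_000, reverse_translation=True, merged_each_run=False))  # 110000
```

Even in this simplified model, ten runs of neglected merge requests cost 5.5 times as much as ten promptly merged ones, and the gap keeps widening with every additional run.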

It was a classic case of automation creating more work – and expense – the moment we stopped babysitting it.

Source: imgflip.com.

The course correction

First things first, we slammed the brakes on the automated trigger. Then, we asked a terrifyingly simple question: how often were those “oh-so-clever” reverse translations actually used for quality checks? The answer, of course, was almost never. (Surprise, surprise.)

So, we bravely turned that feature off for now. We also demoted the job from its automatic glory to manual execution, giving us full, iron-fisted control over when it decided to grace us with its presence. The costs plummeted.

Feeling confident again, we tackled new languages. Naturally, new issues popped up. Even with a generous context window, we had to batch our keys. This worked beautifully until we hit Finnish, whose legendary verbosity made our batches overflow the context window all on its own. Unearthing that gem was a thrilling detective mission. The solution: even smaller batches. Problem solved… for now.

Then came the truly tricky terms. The model had only a vague idea of how certain words translate, for example helpfully turning our “AI engine” into “AI motor” in several languages 🤣. This was an adventure worthy of its own saga. To fix it, we forged a “sacred map”: a context file with explicit rules and term-for-term translations to guide the model. Miraculously, it seems to be working. To be fair, though, evaluating the true cost-effectiveness of this linguistic marvel will require a much longer, more agonizing period of monitoring.
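Both fixes can be sketched in Python. `make_batches` greedily packs keys into batches under a per-request token budget, scaling each key’s estimated size by a per-language expansion factor so that verbose languages like Finnish get smaller batches automatically. `glossary_violations` is an optional post-check against a “sacred map” style glossary; in our setup the map guides the model through its context, so treat this check as an extra safety net. Everything here is hypothetical: the expansion factors are placeholders to tune from your own data, and the 4-characters-per-token estimate is a rough heuristic, so use the provider’s own token counting for real budgets.

```python
import math
from typing import Dict, List

# Hypothetical ratios of translated length to English length; measure your own.
EXPANSION = {"de": 1.3, "fi": 1.5, "ja": 1.0}
DEFAULT_EXPANSION = 1.5  # conservative fallback for languages without data


def estimate_tokens(text: str) -> int:
    # Rough heuristic of ~4 characters per token; not a real tokenizer.
    return max(1, len(text) // 4)


def make_batches(keys: Dict[str, str], lang: str, budget: int) -> List[Dict[str, str]]:
    """Greedily pack keys so each batch's estimated translated size stays within `budget` tokens."""
    factor = EXPANSION.get(lang, DEFAULT_EXPANSION)
    batches: List[Dict[str, str]] = []
    current: Dict[str, str] = {}
    used = 0
    for key, text in keys.items():
        # The key name is echoed in the output too; the text grows by the language factor.
        cost = estimate_tokens(key) + math.ceil(estimate_tokens(text) * factor)
        if current and used + cost > budget:
            batches.append(current)
            current, used = {}, 0
        current[key] = text  # an oversized single key still gets its own batch
        used += cost
    if current:
        batches.append(current)
    return batches


def glossary_violations(source: str, translation: str, glossary: Dict[str, str]) -> List[str]:
    """Report glossary terms found in the English source whose required rendering is missing."""
    problems = []
    for term, required in glossary.items():
        if term.lower() in source.lower() and required not in translation:
            problems.append(f"{term!r} should appear as {required!r}")
    return problems


if __name__ == "__main__":
    keys = {"a": "x" * 40, "b": "y" * 40, "c": "z" * 40}  # hypothetical keys
    print([list(b) for b in make_batches(keys, "de", budget=45)])  # [['a', 'b', 'c']]
    print([list(b) for b in make_batches(keys, "fi", budget=45)])  # [['a', 'b'], ['c']]
    # Hypothetical "sacred map" entry: keep "AI engine" untranslated.
    print(glossary_violations("Train the AI engine", "Trainiere den KI-Motor", {"AI engine": "AI engine"}))
```

With the same keys and budget, German fits into one batch while Finnish is split into two, which is the behavior we had to discover the hard way.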

Even though the back-translation check is now turned off, we still think it makes sense as a QA step and can help spot inconsistencies and errors.

Translation map: example screenshot of the back-translation merge request note table.

Key takeaways

- Automation’s double edge: While LLMs offer incredible efficiency gains, unchecked automation can lead to unexpected costs and complexities, making human oversight indispensable.

- Context is king (and costly): Leveraging contextual information significantly improves translation quality, but be prepared for increased model usage and meticulously monitor its impact on your budget.

- The unseen burden of unmerged changes: Neglecting to promptly merge automated translation updates can snowball into larger, more expensive translation jobs and diminish the benefits of automation.

- Adapt and iterate: Be prepared to continuously refine your approach, whether it’s adjusting batch sizes for verbose languages or providing explicit linguistic rules for specific terms.

- Trust through transparency: Mechanisms like reverse translation (even if you later run them only for ad-hoc checks) help build initial confidence in automated systems, especially when reviewers aren’t fluent in all target languages.

Conclusion

Our expedition from a hackathon concept to a live translation service revealed that LLMs are powerful allies in scaling translations, but they demand a delicate balance of automation, vigilance, and human ingenuity. The true art lies not just in deploying these models, but in mastering their unpredictable quirks and constantly fine-tuning the symphony between AI and human intelligence to ensure both linguistic accuracy and fiscal responsibility. Embrace the LLM revolution, but remember: the most effective automation is always guided by thoughtful engineering and relentless learning.